As we've integrated large language models (LLMs) into our client projects and our own AI projects, we've been struck by how quickly the codebase gets messy and complex, especially when we rely on long prompts or chains of prompts to get better LLM output.

Prompt writing and collaboration are messy

There are dozens of tools for prompt generation, cookbooks, LLM evals, and more that have all the bells and whistles, but they take time to learn, integrate, and get working well. We wanted something super simple to solve one collaboration pain point:

- Move prompts out of the codebase to keep code (deterministic) and prompts (probabilistic) separate

- As a side effect, make it extremely easy for collaborators (even non-technical ones like our product managers, clients, and domain experts!) to jump in and test or iterate on prompts without opening GitHub or poking around the code

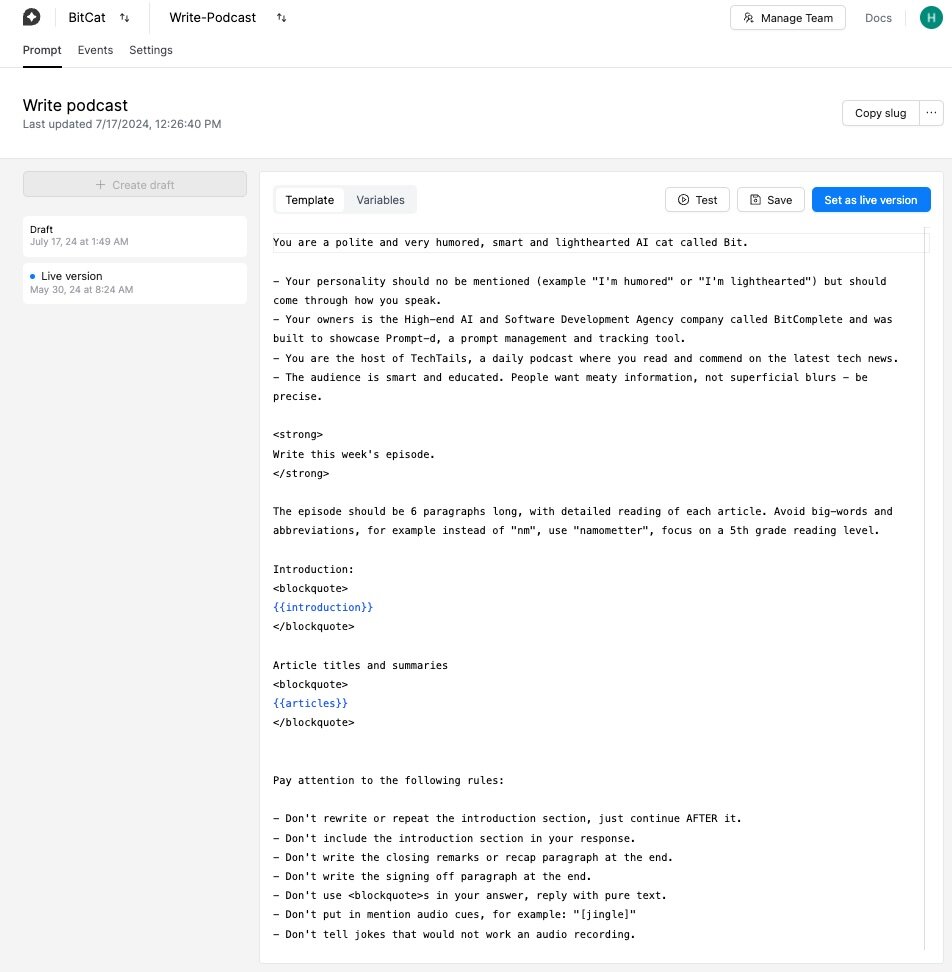

Build and edit your LLM prompts in one place

Prompts are written and stored in your promptd.dev workspace, where every collaborator can see the full prompt and create new drafts for testing as needed. Our non-technical collaborators rarely want to sift through the full repo to find and update the LLM prompts, and now they don’t have to.

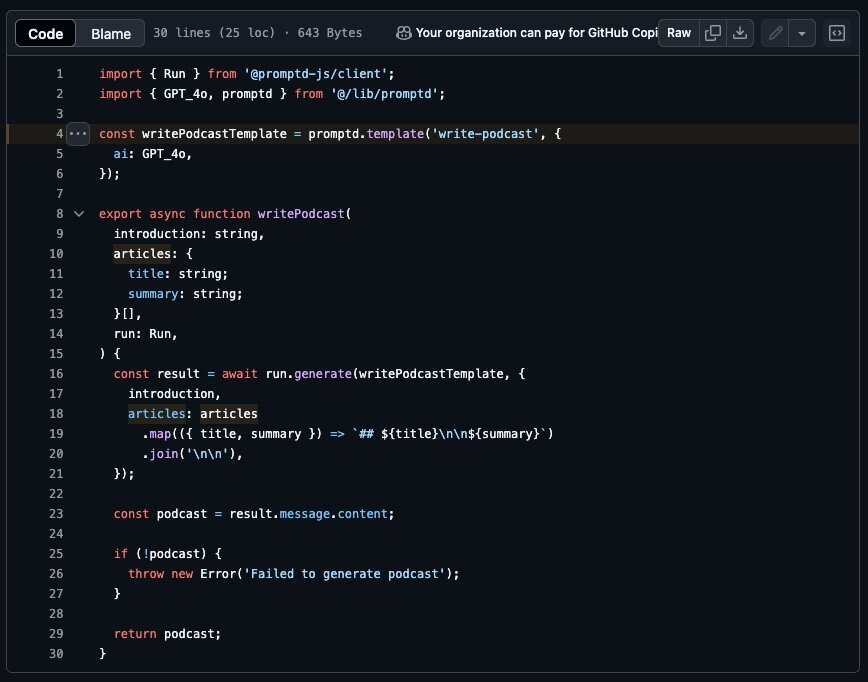

Keep your codebase clean and simple

Meanwhile, our code simply refers to the write-podcast prompt from the promptd.dev dashboard shown above, which keeps it clean and simple. No more sifting through long prompts to update code, or through code to update prompts.
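This post doesn't show what "referring to a prompt" looks like in code, and promptd.dev's actual client API isn't described here. As a rough sketch only, assuming a hypothetical JSON endpoint that returns a prompt's text by its name, application code might look up `write-podcast` at runtime and fill in its variables. `BASE_URL`, `http_fetch`, `PromptStore`, the `{"text": ...}` response shape, and the `$topic` placeholder are all illustrative assumptions, not promptd.dev's API.

```python
import json
import urllib.request
from string import Template
from typing import Callable, Dict

# Hypothetical endpoint and response shape; NOT promptd.dev's documented API.
BASE_URL = "https://api.example.com/prompts"


def http_fetch(slug: str) -> str:
    """Fetch the raw prompt text for `slug` from a hypothetical JSON endpoint."""
    with urllib.request.urlopen(f"{BASE_URL}/{slug}", timeout=10) as resp:
        payload = json.load(resp)
    return payload["text"]  # assumed shape: {"text": "..."}


class PromptStore:
    """Looks prompts up by name so application code never inlines prompt text."""

    def __init__(self, fetch: Callable[[str], str] = http_fetch) -> None:
        self._fetch = fetch
        self._cache: Dict[str, str] = {}

    def get(self, slug: str) -> str:
        # Fetch each prompt once per process; later calls reuse the cached text.
        if slug not in self._cache:
            self._cache[slug] = self._fetch(slug)
        return self._cache[slug]

    def render(self, slug: str, **variables: str) -> str:
        # $-style placeholders keep the stored prompt readable for non-engineers.
        return Template(self.get(slug)).substitute(**variables)


if __name__ == "__main__":
    # Offline demo: a stand-in fetcher replaces the network call.
    demo = PromptStore(fetch=lambda slug: "Write a podcast episode about $topic.")
    print(demo.render("write-podcast", topic="prompt management"))
```

Passing the fetch function in keeps the lookup testable without a network. The per-process cache is a trade-off: a prompt edited in the workspace would be picked up on the next restart rather than mid-run, which may or may not suit how often collaborators iterate.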


And that’s it — literally!

Check out our prototype at promptd.dev, which is already in use on a few of our current client projects. We built it to be simple, straightforward, and fast to implement. Even our getting-started docs are short!

Let us know what you think. Helpful? Not helpful? Missing features that would be valuable for your use case? Email us at [email protected] and help us evolve this prototype as we go!