The Impact of AI on Code Quality

AI tools like GitHub Copilot, OpenAI Codex, and ChatGPT are transforming how fast code is written. For example, GitHub Copilot Enterprise touts “55% faster coding” on its landing page. But there is also growing concern that code quality is degrading, based on three red flags: accelerating code churn, more copy/pasted code, and less moved code.

AI code generators learn from a vast dataset of existing code, which includes both high-quality and poorly written programs. Consequently, the output can be a mixed bag: sometimes excellent, sometimes in need of significant refinement. This inconsistency presents a challenge: how can we ensure that the speed of AI-assisted coding does not compromise the quality of the final product?

Tracking Cognitive Complexity: A Guardrail Against Declining Code Quality

We've found that one low-overhead way to keep our code readable is to pair our AI-assisted coding tools with a metric such as SonarSource’s Cognitive Complexity score.

The concept of “cognitive complexity” aims to measure how difficult it is to understand a specific piece of code. The goal for the developer is to keep the cognitive complexity of methods and functions as low as possible in order to maintain code clarity and readability. To produce the score, factors such as nested conditionals, loops, and the overall structure of the code's logic are assessed.

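Rather than simply counting the paths through a method, the score rises with each break in the linear flow of the code (a conditional, a loop, and so on), and rises further the more deeply that break is nested. The aim is to keep the number manageable. When complexity gets too high, this signals a need for refactoring to simplify the code.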
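To make the scoring concrete, here is a minimal sketch. The two functions (`describeFlat` and `describeNested`) are hypothetical, and the scores in the comments are hand-tallied from the published Cognitive Complexity rules rather than taken from a linter run, so treat them as approximate.

```javascript
// Two sequential checks: each `if` adds 1 and nothing is nested.
// Hand-tallied score: 2.
function describeFlat(user) {
  if (!user) {            // +1
    return 'anonymous';
  }
  if (user.isAdmin) {     // +1
    return 'admin';
  }
  return 'member';
}

// The same two checks, but nested: the inner `if` costs 1 for the
// branch plus 1 for its nesting depth. Hand-tallied score: 3.
function describeNested(user) {
  if (user) {             // +1
    if (user.isAdmin) {   // +2 (1 for the branch, 1 for nesting)
      return 'admin';
    }
    return 'member';
  }
  return 'anonymous';
}
```

Both functions return the same results for the same input; only the shape of the control flow differs, which is what the metric is designed to pick up.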

In our experience, the code generated by AI coding assistants can be needlessly complex, confusing, and far from the cleanest way to solve the problem. By setting a limit on Cognitive Complexity, we get an alert when our code gets too gnarly, so we know when we have to refactor or move things around.

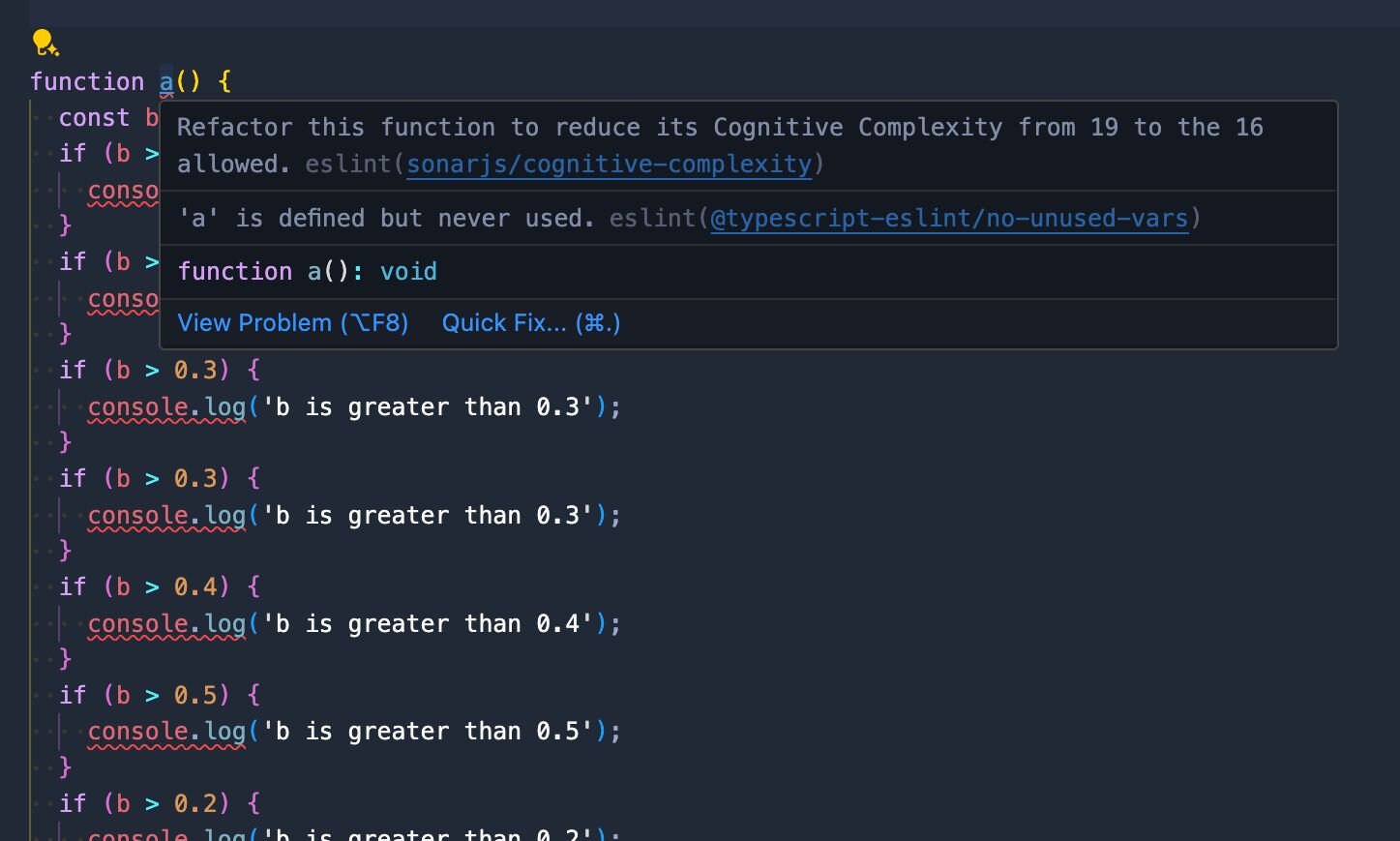

As an example, we use the ESLint setting `'sonarjs/cognitive-complexity': ['error', 16]`, which tells the linter to raise an error for any function or method whose cognitive complexity score exceeds 16. The score counts conditional and iterative operations, and each one costs more the more deeply it is nested.
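For context, here is roughly where a rule like this could live. This is a minimal sketch that assumes a legacy `.eslintrc.js`-style configuration with the `eslint-plugin-sonarjs` package installed. Projects on ESLint's newer flat config register the plugin differently, so adapt it to your setup.

```javascript
// .eslintrc.js (assumed file name and config style, for illustration only)
module.exports = {
  // Makes the sonarjs/* rules available to ESLint.
  plugins: ['sonarjs'],
  rules: {
    // Report an error for any function whose cognitive complexity exceeds 16.
    'sonarjs/cognitive-complexity': ['error', 16],
  },
};
```

The threshold is a team choice. Swapping `'error'` for `'warn'` may be a gentler starting point when introducing the rule to an existing codebase.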

Applying Cognitive Complexity in Practice

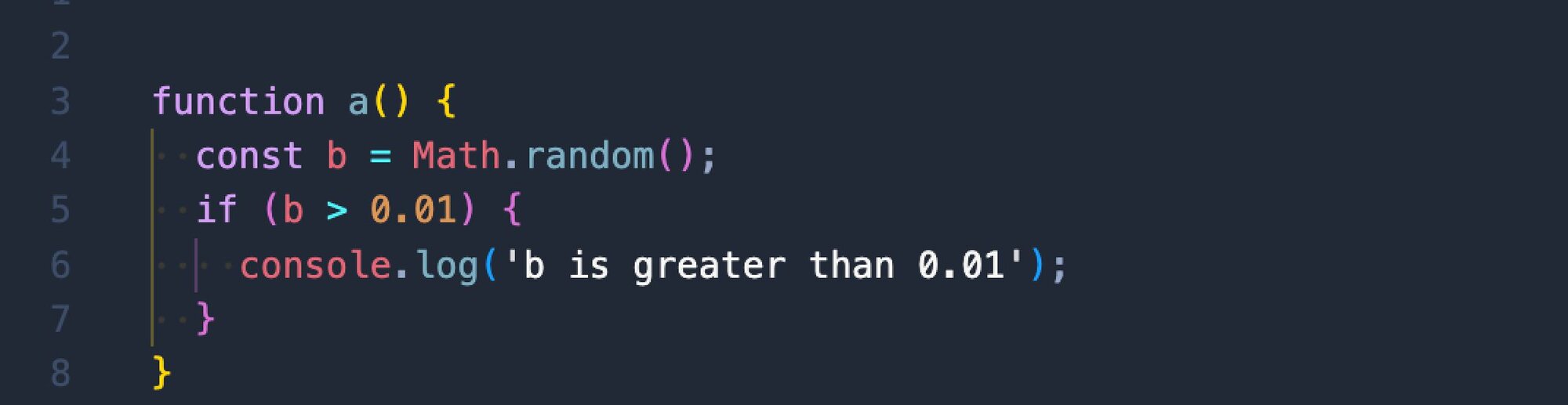

Take this simple function as an example. The cognitive complexity is 2.

But when we stack many such constructs into one function, especially nested inside one another, the linter reports that the cognitive complexity is too high, because there are far more branches for a reader to keep track of. At this point, we know the code needs a refactor.
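As a hypothetical illustration of how quickly the score climbs, and of what a refactor might look like, consider the sketch below. The function names and data shape are invented for this example, and the tallies in the comments are hand-computed from the published rules; your linter's figure is the authoritative one.

```javascript
// Before: four levels of nesting. Hand-tallied score: 1 + 2 + 3 + 4 = 10.
function countBillableItems(orders) {
  let count = 0;
  for (const order of orders) {             // +1
    if (order.status === 'paid') {          // +2 (nesting depth 1)
      for (const item of order.items) {     // +3 (nesting depth 2)
        if (item.quantity > 0) {            // +4 (nesting depth 3)
          count += 1;
        }
      }
    }
  }
  return count;
}

// After: the same logic as a flat pipeline with a named helper.
// There are no branch or loop statements left, so the score should
// drop to roughly zero for each function.
function isBillable(item) {
  return item.quantity > 0;
}

function countBillableItemsFlat(orders) {
  return orders
    .filter((order) => order.status === 'paid')
    .flatMap((order) => order.items)
    .filter(isBillable).length;
}
```

Both versions return the same count. The second simply moves each decision into a small, named step, which is the kind of change the complexity warning tends to nudge us toward.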

Balancing Innovation with Rigor

As AI continues to transform the world of software development, balancing accelerated development and code quality is vital. Implementing systematic safeguards that measure things like cognitive complexity helps ensure that AI tools can be leveraged effectively without sacrificing the standards essential to good software.